Overview

To commit confidently to deploying Fin, Intercom's AI agent, as their front-line customer support, businesses need assurance that it will respond accurately and appropriately, in both content and tone, across a broad range of real-world queries, just as a human agent would. At the time, testing Fin's performance was slow and manual: customers had to enter one question at a time into a live preview to see how it would respond. This made it difficult for them to build the confidence needed to deploy Fin fully to their end users.

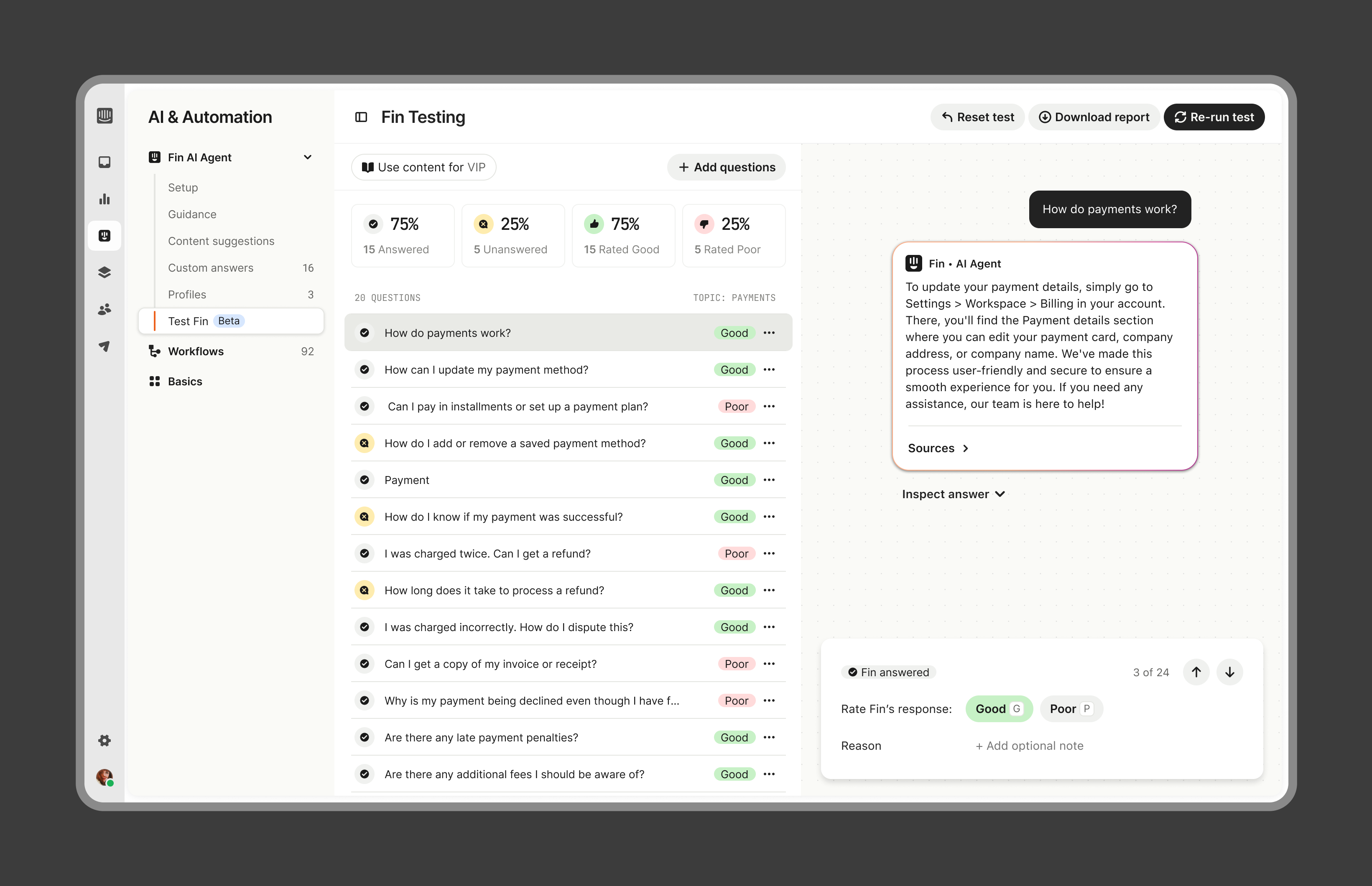

The opportunity: design a tool that lets customers test Fin's responses at scale, identify gaps in knowledge sources, and refine their support content before setting Fin live.

Role

Staff Product Designer

- Took the initial concept to a shipped beta on a tight timeline.

- Designed key workflows: batch uploading of questions, reviewing and inspecting AI responses, and guided paths to improve support content.

- Created patterns for interpreting test results, surfacing response issues, and recommending content refinements.

Outcomes

- Launched Fin's testing tool to beta customers to validate product–market fit.

- The tool is now a core part of Fin's lifecycle workflow: analyze → train → test → deploy.